For the past few months I have been working hard on a fantastic project, and I'm happy to announce that the final stage of the Perth Royal Show site is now live. Check it out at http://www.perthroyalshow.com.au

Information Services Management: personal reflection on a group share tool

The group share tool was valuable for our group work. It acted as a central hub of information for the group, holding not only current work but also contact and member details.

Curry: An Integrated Functional and Logic Programming Language

Curry is an experimental programming language that combines elements of the functional and logic programming paradigms. Based on Haskell, Curry was created to provide a common language for the research, teaching and application of integrated functional logic languages.

Why security is important and how it relates to risk

Reducing the risk of a computer system attack can be achieved by making the system secure. But what is a secure computer system? Computer security is about lowering the risk to the system and ensuring that something cannot happen rather than that it can; for example, that certain users cannot access a Word document. Security is also about control and about creating layers of defence to reduce the risk of a malicious attack. A secure computer system is one that achieves the three goals of computer security: confidentiality, integrity and availability.

The chance of a confidentiality breach increases when access to the system is neither monitored (through logs) nor restricted (through an ID and password or a biometric method). This leaves several vulnerabilities: with no login the system is easy to get into, and with no logs there is no way of telling who attempted a breach or when. Creating a barrier and restricting access therefore reduces the likelihood of someone entering the system. Patient records in a hospital are another example where security is imperative: security must ensure that only authorised people can access the protected data.

Integrity of the system is essential in relation to risk. Much like confidentiality, the integrity of data is best protected by controlling who or what can access the data and in what ways. It involves keeping data “original”, or modified only in acceptable ways by the correct people and processes. The hospital example applies again, since patient records must be accurate when medical decisions are made. Unless protective barriers are created, the risk of an intruder entering the system and committing computer fraud, such as altering patient details, is high.
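Restricting access and keeping logs, as described for the hospital example, can be sketched in a few lines. This is a minimal Python illustration under stated assumptions, not a real records system: the roles, permission sets and the function name `access_record` are hypothetical. Read permission stands for confidentiality, write permission for integrity, and every attempt, allowed or refused, is logged so a breach can be traced to a user and a time.

```python
from datetime import datetime, timezone

# Hypothetical roles and permissions for a hospital records system:
# "read" protects confidentiality, "write" protects integrity.
PERMISSIONS = {
    "doctor": {"read", "write"},
    "nurse": {"read"},
    "clerk": set(),
}

# Every access attempt is recorded here, allowed or not.
audit_log = []


def access_record(user, role, action, record_id):
    """Return True if `role` may perform `action`; log the attempt either way."""
    allowed = action in PERMISSIONS.get(role, set())
    timestamp = datetime.now(timezone.utc).isoformat()
    audit_log.append((timestamp, user, role, action, record_id, allowed))
    return allowed


print(access_record("dr_lee", "doctor", "write", "P-1001"))  # True
print(access_record("j_smith", "nurse", "write", "P-1001"))  # False
```

Unknown roles get an empty permission set, so the default is to refuse access rather than grant it.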

Availability is the final layer of security: the system must be in working order and usable. Availability focuses on the readiness of the system to perform and on its capacity (such as memory and connection speeds). If the system is unavailable, or if some or all users (even authorised users) are unable to access it and perform required tasks efficiently and effectively, then the system is still at risk.

Having said this, it is impossible to achieve one hundred percent security in today’s complex computer environments. Instead, most security systems, specifically malware detection or ‘virus’ software such as Norton Anti-Virus, focus on cutting the risk of being attacked. By building up protective layers to deter the attacker, security works on a “rob the next door” theory: if a system has many layers of protection, the attacker will find it too difficult to compromise and will instead try another computer, the one ‘next door’.

Security plays an important role in our everyday lives, and failures of a security system therefore have damaging effects. An example is Microsoft’s operating system Windows XP, released in 2001. The new operating system was a prime target for attackers, and Microsoft was criticised for its lack of security. Poor security meant the system had many vulnerabilities, or holes, which increased the risk of an attack compromising the system’s data. In 2004 Microsoft released an update called Service Pack 2, spending nearly US$1 billion creating it, which shows how important security in computers is. (Linn, 2004)

The definition of risk includes the impact of an attack. The consequences of an attack range from endangering people’s lives and the environment, as with nuclear plant control systems, to economic and monetary effects, as with bank systems and home computers. This makes security a very important matter.

Security is important because it keeps a computer functioning. We, as computer users, need to ensure that regular virus scans are performed and, importantly, that virus definition updates are downloaded regularly. Doing this minimises exposure to known threats and therefore decreases the risk of a malicious attack. From this we can see that security is a way of reducing the probability of a threat.

Computer Security and Layers just like Shrek's onions

Security is focused on creating layers: an obstacle course that attackers have to hop and climb over, which will hopefully make them give up and attack another computer, making it another person’s problem. Minimising risk is an important goal in achieving a secure system. However, while creating a secure system, it is important not to put the system into a lock-down state. That creates a system with no functionality or usability, which by definition is not a secure system, because it fails the goal of availability. It is important that users of computer systems stay informed and know how to protect their systems. Microsoft’s new operating system, Vista, has promised new and exciting features; hopefully it will not repeat Windows XP’s security problems, and we can work with secure, functional and easy-to-use systems.
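A rough calculation shows why stacking layers helps. Assuming, purely for illustration, that each layer independently stops an attacker with some probability, the chance of getting through every layer is the product of each layer’s failure probability. Real layers are rarely independent, so treat this hypothetical `breach_probability` helper as a sketch of the intuition rather than a risk model.

```python
from math import prod


def breach_probability(layer_stop_rates):
    """Chance an attacker passes every layer, assuming layers fail independently.

    Each rate is the (hypothetical) probability that one layer stops the attacker.
    """
    return prod(1 - p for p in layer_stop_rates)


one_layer = breach_probability([0.9])
three_layers = breach_probability([0.9, 0.8, 0.7])
print(f"One layer: {one_layer:.1%} of attacks get through")
print(f"Three layers: {three_layers:.1%} of attacks get through")
```

With these made-up rates, about 10% of attempts beat the first layer alone, but only about 0.6% get through all three, which is the “obstacle course” effect in numbers.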

More software licences than Microsoft's customer records show

Microsoft informed a company that, based on its calculations, the company should hold more software licences than Microsoft’s customer records show.

This situation points to an ongoing problem: the copying and sharing of software and music over the internet, which software vendors and the music industry have been dealing with for over 10 years.

The shortfall in licences relates to the copying and sharing of software. One approach to reducing copying would be to enforce the update that Microsoft released through its ‘automatic’ updates. Windows Genuine Advantage (WGA) is a program that rewards legitimate users of Windows XP with free content and penalises resellers and users of illegal copies of XP by limiting their access to security fixes, downloads and other updates.

To calculate risk exposure the risk impact is multiplied by the risk probability.

A journal article on ProQuest states that the estimated annual loss from counterfeit copies of Microsoft products is 30 billion dollars. The probability of someone downloading an illegal copy of Microsoft software is hard to calculate, but assuming a 50% chance (in reality it is much greater), the risk exposure is 15 billion dollars. In principle, this means that any measure costing less than 15 billion dollars that eliminated the risk would be a worthwhile investment in stopping the public from obtaining illegal copies of Microsoft’s software. Microsoft therefore has up to 15 billion dollars to spend on avoiding the risk, for example by changing the requirements for obtaining a software licence, or on transferring the risk by allocating it to other people or systems. Finally, Microsoft could assume the risk by accepting the loss.
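The risk exposure formula (impact multiplied by probability) and the figures above can be checked with a short calculation. The `is_worthwhile` helper is a hypothetical addition that makes the “worthwhile investment” reasoning explicit: a countermeasure pays off when it lowers exposure by more than it costs.

```python
def risk_exposure(impact, probability):
    """Risk exposure = risk impact x risk probability."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return impact * probability


def is_worthwhile(impact, p_before, p_after, cost):
    """A countermeasure pays off if it cuts risk exposure by more than it costs."""
    reduction = risk_exposure(impact, p_before) - risk_exposure(impact, p_after)
    return reduction > cost


annual_loss = 30e9   # figure quoted from the ProQuest article
probability = 0.5    # the assumed 50% chance
exposure = risk_exposure(annual_loss, probability)
print(f"Risk exposure: ${exposure / 1e9:.0f} billion")  # Risk exposure: $15 billion
```

For instance, a hypothetical $2 billion measure that cut the probability from 0.5 to 0.3 would pass this test, because it lowers exposure by about $6 billion; one that only cut it to 0.45 would not.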

Another approach would be to reduce the availability of copied software for download. This is very difficult: as soon as one peer-to-peer (P2P) software company is sued and shut down, new reincarnations of the old software appear and gain popularity. Examples include Napster, KaZaa, Morpheus and Grokster. By reducing the variety of methods available for downloading illegal software, Microsoft would reduce the risk of new users joining the many people already downloading it.

Spam Email is a serious problem

Email is a cost-effective form of communication for both businesses and individual users. Spam is a term used for unsolicited email sent by spammers. Spam is a major problem, as the large volume sent every day shows: a large email service provider (ESP) such as Hotmail can receive up to one billion spam messages a day. The threats spam creates fall into four main categories: loss of productivity, increased exposure to virus attacks, reduced bandwidth and potential legal exposure. This report will discuss how spam can be controlled from both an individual and a business perspective. Spam makes up approximately 80% of email messages received, creating a large burden on ESPs and end users worldwide. (Messaging Anti-Abuse Working Group, 2006)

The main method of controlling spam for both individual users and organisations is through machine learning systems, which can be seen in figure 1.1. Controlling spam is not a static issue; spam protection methods must be constantly updated and changed. Most forms of spam protection begin with a filter that separates incoming mail into two folders. The filter works from learnt memory or a blacklist: it puts mail into the quarantine box if it is on the blacklist, or into the inbox if it is considered genuine. If spam is placed in the inbox, users can add it to the blacklist, improving the accuracy of what is regarded as spam. If spam or suspected spam does reach the inbox, it is important that recipients do not open the email or click any links, even a link that refers to opting out or unsubscribing, as this only confirms that the email address is ‘alive’ and the spammer will send more nuisance email.
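The blacklist half of this filtering process, including the user feedback step, can be sketched as follows. `BlacklistFilter` and its methods are hypothetical names, and matching on the sender address alone is a simplifying assumption; the learnt-memory half of the filter is not modelled here.

```python
class BlacklistFilter:
    """Routes mail to 'quarantine' or 'inbox' based on a sender blacklist."""

    def __init__(self, blacklist=None):
        # Addresses are compared case-insensitively.
        self.blacklist = {s.lower() for s in (blacklist or [])}

    def route(self, sender):
        """Return the folder an incoming message from `sender` should go to."""
        return "quarantine" if sender.lower() in self.blacklist else "inbox"

    def report_spam(self, sender):
        """User feedback: blacklist a sender whose spam reached the inbox."""
        self.blacklist.add(sender.lower())


spam_filter = BlacklistFilter(["offers@spam.example"])
print(spam_filter.route("friend@example.org"))   # inbox
spam_filter.report_spam("win@prize.example")     # spam slipped through; the user reports it
print(spam_filter.route("win@prize.example"))    # quarantine
```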

The filter’s memory has to keep learning what is ‘good’ and ‘bad’ email. Spammers are not idle while machines learn, so new ways of fighting spam must be created. One example is more sophisticated learning algorithms that give each word in an email message a weighting. This allows a new filter to be learnt from scratch in about an hour, even when training on more than a million messages. Spammers got around these filters by taking heavily weighted common words such as ‘sex’ and ‘free’ and encoding them as HTML ASCII character entities. The user still sees the words, but the filter cannot detect them, so the email is incorrectly classified and placed into the inbox.
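The word-weighting idea, and the entity trick used to dodge it, can be shown with a toy scorer. The weights and threshold below are invented for illustration (a real filter learns them from labelled mail), and decoding entities with Python’s standard `html.unescape` before scoring is one plausible counter-measure, not necessarily what any particular filter does.

```python
import html
import re

# Hypothetical learnt weights: positive words suggest spam, negative words suggest genuine mail.
WORD_WEIGHTS = {"free": 2.0, "sex": 3.0, "winner": 1.5, "meeting": -1.0}
SPAM_THRESHOLD = 2.5  # assumed cut-off


def words_in(message, decode_entities=True):
    """Split a message into lower-case words, optionally decoding HTML entities first."""
    text = html.unescape(message) if decode_entities else message
    return re.findall(r"[a-z']+", text.lower())


def spam_score(message, decode_entities=True):
    """Sum the weights of known words; unknown words count as zero."""
    return sum(WORD_WEIGHTS.get(w, 0.0) for w in words_in(message, decode_entities))


def is_spam(message, decode_entities=True):
    return spam_score(message, decode_entities) >= SPAM_THRESHOLD


obfuscated = "You are a WINNER of a fr&#101;e prize"  # &#101; is the entity for "e"
print(is_spam(obfuscated, decode_entities=False))  # False: the filter only sees "fr" and "e"
print(is_spam(obfuscated))                         # True: decoding restores "free"
```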

Most organisations have internally managed spam filters, but spending extra money on spam control by outsourcing email security has many benefits. Spam does not simply stop because the 9-to-5 IT staff have gone home; if a new spam threat is identified at night, the damage can be done long before IT staff arrive. If organisations join together, support can be provided much more cost-effectively. Organisations such as the Messaging Anti-Abuse Working Group (MAAWG) have been created to fight spam through a collaborative effort. MAAWG brings together major Internet Service Providers (ISPs) and network operators worldwide with other industry vendors such as Google and Yahoo. Fighting spam together allows blacklists to be monitored, upgraded and maintained just as quickly as, if not more quickly than, spammers can create new attacks.

Securing computers with anti-virus and anti-spyware software is one way to reduce spam. It cuts down the spam sent using the common technique of creating zombie machines, or botnets: computers owned by end users are infected with viruses or Trojans that give spammers full control of the machine, which is then used to send spam. The methods spammers use to send email are very sophisticated, and email can be sent out even when port 25 (the outgoing email port) is blocked.

Another way to reduce spam is to disguise email addresses on forums and bulletin boards. This makes sending spam more expensive, as more money is needed to gather valid email addresses. It helps achieve the goal of pushing the cost of sending spam past the break-even point, i.e. the point where the money gained from sending spam is less than the cost of sending it.
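Disguising an address works because simple harvesting bots search pages for text shaped like an email address. The sketch assumes a naive harvester regular expression and a hypothetical `[at]`/`[dot]` rewriting scheme; real harvesters can be smarter, so this raises the spammer’s cost rather than stopping harvesting outright.

```python
import re

# A naive pattern of the kind a simple address-harvesting bot might use (assumption).
HARVEST_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def obfuscate_email(address):
    """Rewrite an address so people can still read it but simple pattern matching misses it."""
    return address.replace("@", " [at] ").replace(".", " [dot] ")


post = "Contact me at jane.doe@example.com"
safe_post = "Contact me at " + obfuscate_email("jane.doe@example.com")
print(HARVEST_PATTERN.findall(post))       # ['jane.doe@example.com']
print(HARVEST_PATTERN.findall(safe_post))  # []
print(safe_post)  # Contact me at jane [dot] doe [at] example [dot] com
```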

In addition to high-level spam filtering, end users can create their own personal levels of filtering. Most email clients not only have junk mail folders but also let users create rules that sort email into folders by word, sender or subject line. This helps keep spam out of sight so it becomes less of a nuisance.
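Client-side folder rules of this kind can be sketched as an ordered list of checks, where the first match decides the folder. The `Email` and `Rule` classes, the rule fields and the folder names are hypothetical; actual email clients each have their own rule formats.

```python
from dataclasses import dataclass


@dataclass
class Email:
    sender: str
    subject: str
    body: str


@dataclass
class Rule:
    field: str    # which Email attribute to check: "sender", "subject" or "body"
    keyword: str  # case-insensitive text to look for
    folder: str   # destination folder when the rule matches


def sort_email(email, rules, default="Inbox"):
    """Return the folder of the first matching rule, or `default` if none match."""
    for rule in rules:
        if rule.keyword.lower() in getattr(email, rule.field).lower():
            return rule.folder
    return default


rules = [
    Rule("sender", "deals@", "Junk"),
    Rule("subject", "invoice", "Accounts"),
]
print(sort_email(Email("deals@shop.example", "Huge savings", "..."), rules))   # Junk
print(sort_email(Email("boss@work.example", "Invoice for May", "..."), rules))  # Accounts
```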

Biometric Security and its importance in the future

Biometric security could play an important role in securing future computer systems. Biometric security identifies users through something the user is, by measuring physical characteristics such as fingerprints, retinal patterns and even DNA (biometric, Online Computing Dictionary). Authentication can be achieved in a variety of ways. One of the most fundamental and frequently used methods today is the password or PIN, which can be categorised as something the user knows. Another category is something the user has; this usually involves issuing physical objects such as identity badges or physical keys (Pfleeger, 2003). A relatively new method of authenticating is through biometrics. This report will discuss the strengths and limitations of biometric security, and will compare biometrics with the other two qualities that authentication mechanisms use: something the user knows and something the user has. Rather than describing the biometric measures themselves, it will focus on the benefits and problems of biometric security.

Implementing a biometric security system requires several steps. The user is first scanned into the system and the main features of the scan are extracted. A compact and expressive digital representation of the user is then stored as a template in the database. When a person attempts to enter the system, they are scanned, and the main features of the scan are extracted and converted into a digital representation. This is compared with the templates in the database, and if a match is found the user is granted access. (Dunker, 2004) A disadvantage of this template-style design, which most biometric devices use, is that it allows an attacker to gain entry by intercepting and capturing a template file and then injecting it into the communications line, spoofing the system into treating the attacker as an authorised user.
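The enrol-then-match process described above can be sketched with feature vectors and a distance threshold. Everything here is an assumption for illustration: `extract_features` is a placeholder (real systems extract features from images), the template store is an in-memory dictionary, and Euclidean distance with a 0.5 threshold stands in for whatever matcher a real device uses.

```python
import math

# Hypothetical template database: user id -> feature vector stored at enrolment.
templates = {}


def extract_features(scan):
    """Placeholder: a real system extracts features from an image; here the scan is
    assumed to already be a list of numeric features."""
    return [float(x) for x in scan]


def enrol(user_id, scan):
    """Scan the user into the system and store their template."""
    templates[user_id] = extract_features(scan)


def verify(user_id, scan, threshold=0.5):
    """Compare a fresh scan with the stored template; accept if close enough."""
    template = templates.get(user_id)
    if template is None:
        return False
    return math.dist(template, extract_features(scan)) <= threshold


enrol("alice", [0.10, 0.80, 0.30])
print(verify("alice", [0.12, 0.79, 0.31]))  # True: small differences between scans are tolerated
print(verify("alice", [0.90, 0.10, 0.70]))  # False: a different person
```

Note that `verify` accepts whatever feature vector arrives, so anyone who captured Alice’s template from the communications line could simply replay it; that is the spoofing weakness of the template design.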

Another drawback of this design is that it requires personal data such as DNA, thumbprints and other sensitive information to be saved as template files on database computers. This creates a greater security risk and raises privacy issues, such as who has access to this data; these and similar questions would have to be answered before a biometric system was implemented.

Biometric systems allow for some error when scanning a user, to provide better functionality and usability. The false acceptance rate (FAR, the probability of accepting an unauthorised user) and the false rejection rate (FRR, the probability of incorrectly rejecting a genuine user) are set so that genuine users are not inconvenienced by being denied access. Assuming these rates are set correctly, biometric devices can differentiate between an authorised person and an impostor. (Itakura, Tsujii, 2005, October)
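The trade-off between FAR and FRR can be made concrete. Assuming a matcher that outputs a similarity score and accepts anything at or above a threshold, the sketch below counts false accepts among impostor attempts and false rejects among genuine attempts. The scores are invented for illustration, not measured data.

```python
def far_frr(genuine_scores, impostor_scores, threshold):
    """Return (FAR, FRR) for a matcher that accepts scores >= threshold."""
    false_accepts = sum(s >= threshold for s in impostor_scores)
    false_rejects = sum(s < threshold for s in genuine_scores)
    return false_accepts / len(impostor_scores), false_rejects / len(genuine_scores)


genuine = [0.91, 0.85, 0.78, 0.95, 0.60]   # made-up scores from genuine users
impostor = [0.20, 0.35, 0.72, 0.10, 0.40]  # made-up scores from impostors

for t in (0.5, 0.7, 0.8):
    far, frr = far_frr(genuine, impostor, t)
    print(f"threshold={t}: FAR={far:.0%} FRR={frr:.0%}")
# threshold=0.5: FAR=20% FRR=0%
# threshold=0.7: FAR=20% FRR=20%
# threshold=0.8: FAR=0% FRR=40%
```

Raising the threshold shuts out the impostor who scored 0.72, but at the cost of rejecting more genuine users, which is why the rates have to be “set correctly” for each deployment.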

Biometric devices could create a more seamless and user-friendly environment for their users. Lost or stolen cards and forgotten passwords can cause major headaches for support desks and users alike. This problem is eradicated with biometric security, since it is practically impossible for users to forget or leave their hand or eye at home. Identification methods that rely on the user remembering a password or carrying an object such as a smart card are also easier to compromise than biometrics. For example, approximately 25% of ATM card users write their PIN on the card, making the PIN security useless. (Dunker, 2004) Since biometric devices measure characteristics unique to each person, they are more reliable at granting access only to the intended people. Resources can then be diverted to other uses instead of being spent on policing purchased tickets or resetting passwords. An example from our local area is the recent upgrade of the Transperth system to smart cards, which means security guards can focus on keeping people safe instead of checking tickets and issuing fines.

Biometric security is not a new form of security; signatures have provided a means of security for decades. But measuring human characteristics such as fingerprints and irises with computer systems is a new security method. Because this new form of biometrics is in its preliminary stages, common development issues arise: the expense of implementation and the lack of testing in real-world situations mean biometrics are not yet practical for use today. Once these “teething stages” are overcome, however, biometrics could become a powerful security method. (Dunker, 2004)

Imagine a scenario where you are your own key to everything. Your thumb opens your safe, starts your car and gives access to your account records. This could seem very convenient. However, once biometric security is attacked, you cannot exactly change your fingerprint or your DNA. Your biometric data is not really a secret either: you leave fingerprints on objects all day, and your iris can be captured from anywhere you look. If someone steals your biometric information, a large security risk is created, because it remains stolen for life. Unlike with conventional authentication methods, you cannot simply ask for a new one. (Schneier, 1999)

Biometrics could become very useful, but unless handled properly they should not be used as keys: keys need to be secret and able to be destroyed and renewed, and at present biometrics do not have these qualities. Although still in its early stages, a proposal by Yukio Itakura and Shigeo Tsujii for biometric authentication based on cryptosystem keys containing biometric data enables biometric devices to be more secure and reliable when used as a key. The system generates a public key from two secret keys: one derived from a hash function of the biometric template data, and another created by a random number generator. (Itakura, Tsujii, 2005, October)
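The two-secret idea can be illustrated with a toy discrete-logarithm construction. This is emphatically not Itakura and Tsujii’s actual scheme: the modulus, base and function names are assumptions chosen only to show one secret derived from a hash of template data, one from a secure random generator, and a public value computed from both. It also glosses over the fact that two scans of the same finger never produce identical bytes.

```python
import hashlib
import secrets

# Toy parameters for illustration only; NOT a secure choice of group or generator.
P = 2**127 - 1  # a Mersenne prime used as the modulus
G = 3           # assumed base


def biometric_secret(template_bytes):
    """Secret key 1: derived from a hash of the biometric template data."""
    digest = hashlib.sha256(template_bytes).digest()
    return int.from_bytes(digest, "big") % (P - 1)


def random_secret():
    """Secret key 2: drawn from a cryptographically secure random number generator."""
    return secrets.randbelow(P - 1)


def public_key(k_bio, k_rand):
    """Combine both secrets into one public value, G^(k_bio + k_rand) mod P."""
    return (pow(G, k_bio, P) * pow(G, k_rand, P)) % P


k_bio = biometric_secret(b"alice-fingerprint-template")  # hypothetical template bytes
k_rand = random_secret()
print(hex(public_key(k_bio, k_rand)))
```

Because the random secret can be thrown away and regenerated, a compromised public key can be replaced even though the fingerprint behind `k_bio` never changes; that is the renewability raw biometrics lack.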

In conclusion, biometric devices are definitely a viable option for the future. But, as discussed, they have several issues that need to be dealt with before real-world installation will occur. Biometric devices give their users convenient, trouble-free authentication, but at present they also have security loopholes that need to be closed.

1.1 References

Itakura, Y., & Tsujii, S. (2005, October). Proposal on a multifactor biometric authentication method based on cryptosystem keys containing biometric signatures. International Journal of Information Security, 4(4), 288.

Jain, A., Hong, L., & Pankanti, S. (2000, February). Biometric identification. Communications of the ACM, 43(2), 90.

Pfleeger, C. P., & Pfleeger, S. L. (2003). Security in computing (3rd ed.). Upper Saddle River, NJ: Prentice Hall Professional Technical.

Schneier, B. (1999, August). The uses and abuses of biometrics. Communications of the ACM, 42(8), 136.

Weinstein, L. (2006, April). Fake ID; batteries not included. Communications of the ACM, 49(4), 120.

How changes in technology affect security.

Technology is just about everywhere, and as time progresses, technological advances create new problems that make a truly secure environment harder to achieve. Computers are devices used to solve practical problems, which makes them part of technology. Changes in technology create risks that are almost impossible to predict and are not yet understood. Almost every new piece of technology brings a new security issue or vulnerability, and with each change an attacker will think of a way to exploit the vulnerability and compromise the system.

Data security has always been an issue. Even in the early days of computers and diskettes, the focus was on confidentiality, integrity and availability, and that focus has never changed. The recent thumb drive boom is an example of a hardware technology changing the methods of securing a system. Thumb drives give thieves or attackers the ability to move a large chunk of data virtually undetected and to install malicious software. To combat this problem, some organisations have banned their use or disabled their mounting by ordinary users; others have physically disconnected the USB ports inside computers or even filled the USB sockets with epoxy glue. These methods of solving a data security issue were adopted as demand for thumb drives grew and their security problems became evident.

As technology changes (hopefully for the better), attackers change as well. Each new release of technology creates vulnerabilities in a system, and these become targets for attackers. This is most likely because no amount of prototyping compares with having the new technology functioning in the real world. This was evident in 2001, when Microsoft released its new operating system, XP. For a number of years Microsoft was criticised for a lack of security features (such as poor firewall and spyware protection), and it took almost three years for an update to be released. During those three years, vulnerabilities were discovered and exploited; for example, ActiveX, Microsoft’s technology for running downloadable software components, was used to spread viruses, Trojan horses and other malware.

It is important to note that not all changes in technology affect security or the methods of security. In a recent press release, Intel announced a new processor family called Mobile Penryn. These processors have four cores, are twenty-five per cent smaller and achieve faster speeds (with a larger cache) on less power, staggering achievements on all accounts. This advance is probably not going to create noticeable security issues, since it fundamentally just increases the speed of the processor.

What does this mean for us? Perhaps new names for new breeds of security systems and malware, as the line between the different types of malware has become quite thin. What we do know is that the principles applied to making a system safe (confidentiality, integrity and availability) have not been affected by technology. Instead, the methods of achieving a secure system have been shaped by the constantly changing computer environment we live in.

Relationship between system security, functionality and usability: the never-ending balance

Vulnerabilities in a system create risks; risk management is about avoiding, transferring or assuming these risks. Risk management must also take into account the context in which the system is used; for example, a one-hundred-thousand-dollar security system makes no sense for a home network, which would be worth nowhere near that figure. If too little control is implemented, the system will be insecure and have a high risk of being attacked. If too much security is implemented, the system’s functionality and usability will suffer. Risk management becomes a three-way see-saw: if just one element is focused on, the system will be lacking in one or both of the others, but if all three are balanced, the system can be secure, functional and easy to use.

To reduce potential threats, security software is installed on machines. Since every computer set-up is different, the defaults of virus and firewall programs are simply not enough for effective security. Users must protect themselves: a virus and firewall program may have all the functionality needed to set up a secure computer, but only if it is configured properly. A recent survey by America Online and the National Cyber Security Alliance found that of 329 homes, 67% either had no anti-virus software at all or had not updated it within the previous week. These statistics show that knowledge of how to protect a computer from malware is poor. Consequently, if the software has good usability, so that users can find, understand and use its embedded security features, users will make use of its full functionality and create a secure system.

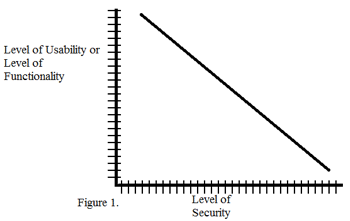

Focusing heavily on security reduces the functionality and usability of the system. For example, a firewall that completely blocks incoming and outgoing data makes the system very secure, since no harmful data can get in. But the firewall also cuts off any internet or network connections the computer might have had. The functionality of the network and internet connection still exists, but because of the firewall block its usability is non-existent. Therefore, a heavy focus on security creates poor usability, and through poor usability the functionality of the computer is drastically reduced, as the user can only perform offline tasks. This can be seen on the graph below.

Level of Security versus Usability or functionality

Figure 1 illustrates the negative correlation between usability and security, or between functionality and security. A system with high functionality or usability runs a greater risk of being attacked. The figure illustrates the trade-off between the two sets of variables: a high level of security creates a lower level of usability or functionality.

Focusing heavily on a functional and usable system can decrease the security of the system. It could be argued that the most functional and usable system would have no virus software or firewall at all and would ignore the risk of an attack. Initially this could be a valid argument, but once viruses and other malware made their way onto the system, its usability and functionality would slowly disintegrate. A middle ground needs to be found where the user is happy with the functionality and usability of the system without compromising on security.

Honeypots: usage and prevention

Honeypots are used as an additional level of security: decoys that simulate networked computer systems and are designed to attract a hacker’s attention so that the hacker attacks them. Honeypots can be either virtual or physical machines. Forensic information gathered from the compromised machine(s) is often needed to aid in the prosecution of intruders. Honeypots also give insight into the mind of an intruder: logs and other records on the machine show how the intruder probed and, if they successfully entered the system, how they gained access. This information is very valuable as a learning tool for network administrators designing, creating or updating a computer system, since they can better protect the real neighbouring network systems when they know exactly how common attacks occur. This report will discuss how honeypots are used and the types of malicious attacks they can prevent.

Virtual honeypots place virtual machines at the unallocated addresses of a network from a single machine, so the unallocated addresses appear as real machines placed on Internet Protocol (IP) addresses. Honeypots can simulate any operating system at an IP address. Through the personality engine, they simulate the network stack behaviour (how packets are encapsulated) of a given operating system: the protocol headers of every outgoing packet are changed to match the characteristics of that operating system. This makes the machines appear genuine and therefore desirable to attackers. Because the network appears genuine, an attacker could potentially be confused and deterred by the virtual honeypots, as the network could appear too large and too complex. Furthermore, any traffic to a honeypot machine gives early warning of attacks on other, physical machines.
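The personality engine idea can be illustrated by rewriting header fields of outgoing packets so each virtual host looks like a particular operating system. The `Packet` class, addresses and header values are illustrative assumptions (initial TTLs of 128 for Windows and 64 for Linux are common defaults, but real fingerprinting looks at many more fields), not the configuration of any particular honeypot product.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    ttl: int = 64
    tcp_window: int = 8192
    payload: bytes = b""


# Illustrative OS "personalities": simplified header values associated with each OS.
PERSONALITIES = {
    "windows_xp": {"ttl": 128, "tcp_window": 65535},
    "linux_2_6": {"ttl": 64, "tcp_window": 5840},
}

# Hypothetical mapping of otherwise unallocated addresses to simulated hosts.
VIRTUAL_HOSTS = {"10.0.0.23": "windows_xp", "10.0.0.24": "linux_2_6"}


def apply_personality(packet):
    """Rewrite an outgoing packet's headers to match the simulated OS of its source."""
    personality = VIRTUAL_HOSTS.get(packet.src)
    if personality is None:
        return packet  # not a virtual host: leave the packet alone
    return replace(packet, **PERSONALITIES[personality])


probe_reply = Packet(src="10.0.0.23", dst="203.0.113.5")
print(apply_personality(probe_reply))
```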

Honeypots can also redirect traffic or connections. This gives powerful control over the network and also makes the virtual network appear genuine. Redirection allows a request for a service on a virtual honeypot to be forwarded to a service running on a real server. Connections can also be reflected back to their source, which means a hacker could potentially end up attacking their own machine.
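Redirection can be pictured as a small routing decision made for each incoming connection. The rule tables, addresses and the `redirect` function below are hypothetical, a sketch of the forward/reflect choice rather than how any specific honeypot stores its rules.

```python
# Hypothetical redirection rules for a virtual honeynet.
FORWARD_TO_REAL = {("10.0.0.23", 80): ("192.168.1.10", 80)}  # virtual web server -> real server
REFLECT = {("10.0.0.24", 22)}                                # SSH probes are sent back


def redirect(src_ip, dst_ip, dst_port):
    """Decide where a connection to a virtual honeypot should really go."""
    key = (dst_ip, dst_port)
    if key in FORWARD_TO_REAL:
        return FORWARD_TO_REAL[key]   # forward to a service on a real server
    if key in REFLECT:
        return (src_ip, dst_port)     # reflect back: the attacker ends up probing themselves
    return key                        # otherwise the honeypot answers itself


print(redirect("198.51.100.7", "10.0.0.23", 80))   # ('192.168.1.10', 80)
print(redirect("198.51.100.7", "10.0.0.24", 22))   # ('198.51.100.7', 22)
```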

Honeypots are excellent tools for intercepting traffic from computers that randomly scan the network. Because of this, honeypots are excellent at detecting malicious internet worms that scan randomly for new targets, such as Blaster, Code Red and Slammer. Once a worm has been found, countermeasures can be carried out against infected machines: when the honeypot recognises a worm, virtual gateways block it from entering any further into the network.
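Because no legitimate host has a reason to contact an unallocated address, a source that touches several honeypot addresses is probably scanning, which is how randomly scanning worms give themselves away. A minimal sketch of that idea, with a hypothetical `find_scanners` helper and an assumed threshold of three distinct honeypot targets:

```python
from collections import defaultdict


def find_scanners(connections, honeypot_addresses, min_targets=3):
    """Return sources that contacted at least `min_targets` distinct honeypot addresses.

    `connections` is an iterable of (source_ip, destination_ip) pairs.
    """
    touched = defaultdict(set)
    for src, dst in connections:
        if dst in honeypot_addresses:
            touched[src].add(dst)
    return {src for src, dsts in touched.items() if len(dsts) >= min_targets}


honeypots = {"10.0.0.23", "10.0.0.24", "10.0.0.25"}
traffic = [
    ("198.51.100.7", "10.0.0.23"),
    ("198.51.100.7", "10.0.0.24"),
    ("198.51.100.7", "10.0.0.25"),   # one source sweeping the honeypot addresses
    ("192.168.1.50", "10.0.0.23"),   # a single stray connection
    ("192.168.1.50", "192.168.1.10"),
]
print(find_scanners(traffic, honeypots))  # {'198.51.100.7'}
```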

Through the use of honeypots, spam-sending methods can be learnt and spam can therefore be reduced. Spammers send spam through open mail relays and proxy servers to disguise the sender of the message. Honeypots can be used to understand how spammers operate and to automate the identification of incoming spam, which can then be submitted to shared spam filters.

Honeypots are decoy servers that can be set up inside or outside the demilitarised zone (DMZ) of a network firewall. If the honeypot computer is infected with a virus or Trojan, damage can be done to other machines in the network and the virus could spread onto the ‘real’ system. As discussed, honeypots can help analyse current spamming methods, but they also have the potential to add to the amount of spam sent: a compromised honeypot could become a zombie machine in a botnet, sending spam automatically without the owner’s knowledge. Because of these issues, it is important that honeypots are set up inside the firewall for control purposes, or are closely monitored. Otherwise the negatives may outweigh the positives.

Honeypots can be used as a tool when creating new methods of securing systems from malicious attacks. For example, the program BackTracker enables system administrators to analyse intrusions on their systems, and honeypots were used to test how effective BackTracker was at this analysis. Effective and accurate testing is essential in creating any new system. Through the use of honeypots, programs such as BackTracker can be tested in a simulated scenario and then improved, to ensure they work efficiently and effectively.
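BackTracker works backwards from a detection point through dependencies between processes and files. The sketch below is a heavily simplified illustration of that idea, not BackTracker’s implementation: events are hypothetical `(time, source, sink)` records meaning information flowed from `source` to `sink`, and an event is followed only if it happened before the affected object could have influenced the detection point.

```python
from collections import namedtuple

# An event means: at `time`, information flowed from `source` to `sink`.
Event = namedtuple("Event", "time source sink")


def backtrack(events, detection_point, detection_time):
    """Return, oldest first, the events that could have affected `detection_point`."""
    relevant = []
    # For each object, the latest time at which it can still matter to the investigation.
    horizon = {detection_point: detection_time}
    for event in sorted(events, key=lambda e: e.time, reverse=True):
        limit = horizon.get(event.sink)
        if limit is not None and event.time <= limit:
            relevant.append(event)
            # The source only matters up to the moment it influenced the sink.
            horizon[event.source] = max(horizon.get(event.source, event.time), event.time)
    return list(reversed(relevant))


log = [
    Event(1, "network:attacker", "process:httpd"),
    Event(2, "process:httpd", "file:/tmp/x"),
    Event(3, "file:/tmp/x", "process:sh"),
    Event(4, "process:sh", "file:/etc/passwd"),
    Event(5, "process:cron", "file:/var/log/cron"),  # unrelated activity
    Event(6, "process:sh", "file:/tmp/later"),       # happened after detection
]
for e in backtrack(log, "file:/etc/passwd", 4):
    print(e.time, e.source, "->", e.sink)
```

The result traces the modified password file back to the attacker’s network connection while ignoring unrelated and later activity.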

Watson, D. (2007, January). Honeynets: a tool for counterintelligence in online security. Network Security, 2007(1), 4.

Nikolaidis, I. (2003, June). Honeypots, tracking hackers. IEEE Network, 17(4), 5.

King (2005). Backtracking intrusions. ACM Transactions on Computer Systems, 23(1), 51.

The Internet RFC 789 Case Study 4 – Faults and Solutions

Events like the ARPAnet crash are considered major failures: the network went down for quite some time. With today’s management tools and software, the ARPAnet managers might have been able to avoid the crash completely, or at least detect and correct it much more efficiently than they did.

Dropped Bits

The three updates created by IMP 50 were not thoroughly protected against dropped bits. Better planning, organising and budgeting would have been valuable to the network. The managers were aware that resources were scarce, and the limited CPU cycles and memory were allocated to other tasks. Instead, only the update packets as a whole were checked for errors. Once an IMP receives an update, it stores the information from the update in a table. If it needs to re-transmit the update, it simply sends a packet built from the information in the table. This means that for maximum reliability the tables would also need to be checksummed. Again, this did not appear to be cost-effective, as checksumming large tables requires a lot of CPU cycles (Rosen, 1981).
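The trade-off described above can be sketched in a few lines. The sketch assumes that a stored table keeps a checksum of its own contents. Every re-transmission then means re-reading the whole table to verify it, which is the CPU cost the managers wanted to avoid. The simple 16-bit additive checksum and the `StoredTable` class are illustrative assumptions, not the IMP's actual algorithm or data layout.

```python
def checksum16(data: bytes) -> int:
    """Illustrative 16-bit additive checksum (not the algorithm the IMPs used)."""
    return sum(data) & 0xFFFF


class StoredTable:
    """Hypothetical routing table held in memory together with a checksum of its contents."""

    def __init__(self, entries: bytes) -> None:
        self.entries = bytearray(entries)
        self.stored_sum = checksum16(self.entries)

    def verify(self) -> bool:
        """Re-read every byte before re-transmitting; False means memory was corrupted."""
        return checksum16(self.entries) == self.stored_sum
```

Suppose a bit in one stored entry drops from 1 to 0 after the checksum was taken. Then `verify()` returns False, so the corrupted entry would not be re-sent. The price is that `verify()` touches every byte of the table each time it is called.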

Apart from checksumming, the hardware had parity checking disabled. It had been reporting parity errors when in fact there were none. This is a common security problem: fail-safe measures are installed but then disabled because they do not work correctly. Instead, the system should have been repaired so that it reported errors correctly.
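A minimal sketch of even parity, the kind of check that was switched off, shows both what it offers and where it stops. It assumes one parity bit per 6-bit value. This is an illustration rather than the IMP hardware's actual memory layout.

```python
def parity_bit(value: int) -> int:
    """Even parity: 1 when the value has an odd number of 1 bits, so the total count is even."""
    return bin(value).count("1") % 2


def parity_ok(value: int, stored_parity: int) -> bool:
    """True if the value still matches the parity bit recorded when it was written."""
    return parity_bit(value) == stored_parity
```

Suppose the value 44 (101100) is stored alongside parity bit 1. A single dropped bit giving 40 (101000) is caught. A second dropped bit giving 8 (001000) leaves an odd number of ones again, so it passes the check. Parity alone would therefore not catch every corruption.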

More checksumming might have detected the problem, but even then checksumming is never entirely free of cracks for bits to fall through. Another option would have been to modify the algorithm used. Again, this could have fixed one problem while allowing others to arise.

Performance and Fault Management

The crash of ARPAnet was not caused by a single technical fault. Instead, a number of faults added together to bring the network down. No algorithm or protection strategy can be guaranteed to be fail-safe. The managers should therefore have aimed to reduce the likelihood of a crash or failure. In the event of a crash, they should have had detection and performance methods in place that would reveal earlier that something was wrong. For example, they could have detected high-priority processes that consume more than a set share of system resources (for example 90%). Routing updates could then continue while enough capacity was still left for the line up/down packets to be sent.
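The detection idea above can be sketched as two small functions:

- one flags any process whose share of the CPU stays above a threshold for several samples in a row;
- one caps a high-priority task so that some capacity is always left for other work, such as line up/down messages.

The 90% threshold comes from the text. The three-sample window, integer percentages and function names are assumptions for illustration.

```python
from typing import Dict, List, Set, Tuple


def flag_hogs(samples: Dict[str, List[int]], threshold: int = 90, window: int = 3) -> Set[str]:
    """Return processes whose CPU share (in %) exceeded `threshold` in each of the last `window` samples."""
    hogs = set()
    for name, shares in samples.items():
        recent = shares[-window:]
        if len(recent) == window and all(share > threshold for share in recent):
            hogs.add(name)
    return hogs


def cap_share(requested: int, cap: int = 90) -> Tuple[int, int]:
    """Grant a high-priority task at most `cap`% of the CPU; return (granted, left for other work)."""
    granted = min(requested, cap)
    return granted, 100 - granted
```

Requiring several consecutive high samples avoids flagging a process for a single brief burst. Capping rather than stopping the task lets routing updates keep flowing.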

If the ARPAnet managers had been able to control the network properly, using network monitoring tools that report on system performance, they might have responded to the problem before the network became unstable and unusable. Network monitoring can be active (routinely testing the network by sending messages) or passive (collecting data and recording it in logs). Either type might have been a great asset, as the falling performance could have been detected early. The managers could then have avoided fire fighting, that is, trying to repair the problem after the damage is done (Dennis, 2002). Having said this, system resources were scarce, as already mentioned. Sending and recording monitoring data requires memory and CPU time that might not have been available, so some overall speed might have had to be sacrificed to allow for network monitoring.

Other network management tools, such as alarms, could also have allowed the problem to be corrected much more efficiently by alerting staff as soon as a fault occurred. Alarm software would have alerted staff earlier. It would also have made it easier to pinpoint the cause of the fault, so a fix could be implemented quickly.
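Active monitoring and alarms can be combined in a short sketch. A monitor polls a device, keeps a passive log of every result, and raises an alarm after a run of failed probes. The probe is passed in as a plain function so the sketch runs without a network; in practice it might be a ping or a test message. The class name, the three-failure limit and the alarm text are hypothetical.

```python
from typing import Callable, List, Tuple


class ActiveMonitor:
    """Hypothetical monitor: probes one device and raises an alarm after repeated failures."""

    def __init__(self, device: str, probe: Callable[[], bool],
                 alarm: Callable[[str], None], max_failures: int = 3) -> None:
        self.device = device
        self.probe = probe
        self.alarm = alarm
        self.max_failures = max_failures
        self.failures = 0
        self.log: List[Tuple[str, bool]] = []  # passive record of every probe result

    def poll(self) -> None:
        """Run one probe, log the result, and alarm when the failure count first reaches the limit."""
        ok = self.probe()
        self.log.append((self.device, ok))
        if ok:
            self.failures = 0
            return
        self.failures += 1
        if self.failures == self.max_failures:
            self.alarm(f"{self.device}: {self.failures} consecutive probe failures")
```

The alarm fires only when the count first reaches the limit, which avoids flooding staff with a repeat alarm on every later poll. A successful probe resets the count.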

Network Control

The lack of control over the misbehaving IMPs was a major factor in the downtime. The IMPs were many kilometres apart. Fixes and patches had to be loaded onto the machines remotely, which took several hours because of the network's slow speeds. This lack of control over the network's allocation of resources contributed to its slow recovery. Even with the network in a down state, a network management tool would have been able to download software to the network devices, configure parameters and make backups, with changes applied at the manager's discretion (Duck & Read, 2003).

Planning and Organising

The lack of proper planning, organising and budgeting was one of the main factors that caused the network to fail. The ARPAnet managers were aware that there was no protection against dropped bits. However, because of cost and hardware constraints, and an ‘it won’t happen to us’ attitude, they were prepared to disregard it.

Better forecasting and budgeting might have allowed them to put more checking in place, which could have picked up the problem straight away. Only the packets were checked. Checking the tables that the packets were copied into was not considered cost-effective, and this left a large hole in their protection. Evidently the risk was not considered large enough, since the problem seemed unlikely to happen. Documenting the fact that there was no error checking on the tables might also have reduced the time it took to correct the error (Dennis, 2002).

The RFC 789 case study points out that they knew bit dropping could be a future problem. If so, procedures for dealing with dropped bits should have been documented for future reference. Such planning could have cut the network's downtime considerably.

Correct application of the five key management tasks, together with the network management tools available today, would have made the ARPAnet crash of 1980 avoidable, or at least easier to detect and correct.

Better planning and documentation would have allowed the managers to look ahead and acknowledge any gaps in their protection. They could have prepared documentation and procedures highlighting the aspects of the network that were not protected.

Once the crash had occurred, decisions were made without any certainty about what the problem could be. Planning, organising and directing could have helped resolve the situation more productively than the fire-fighting approach that was used.

The Internet RFC 789 Case Study 3 – How the ARPAnet Crash Occurred

An interesting and unusual problem occurred on October 27th, 1980 in the ARPA network. For several hours the network was unusable but still appeared to be online. The outage was caused by a high-priority process that was executing and consuming more system resources than it should have. The ARPAnet's IMPs (Interface Message Processors) were used to connect computers to each other, and they suffered a number of faults. Restarting individual IMPs did nothing to solve the problem. As soon as they reconnected to the network, they resumed the same behaviour and the network stayed down.

It was eventually found that the cause was bad routing updates. Each IMP creates these updates at least once per minute. They contain information such as the IMP's direct neighbours and the average number of packets per second across each line. The fact that the IMPs could not keep their lines up was also a clue. It suggested that they were unable to send the line up/down protocol messages because of heavy CPU utilisation. After a certain amount of time, a line whose up/down messages could not be sent would be declared down.

A core dump (a snapshot of an IMP's memory) showed that all the IMPs had routing updates waiting to be processed. It was later revealed that all of these updates came from a single IMP, IMP 50.

The dump also showed that IMP 50 had been malfunctioning before the network outage and was unable to communicate properly with its neighbour, IMP 29. IMP 29 was also malfunctioning: it was dropping bits.

The updates from IMP 50 that were waiting to be processed followed a pattern: 8, 40, 44, 8, 40, 44, and so on. This was because of the way the algorithm determined which update was the most recent. 44 was considered more recent than 40, 40 more recent than 8, and 8 more recent than 44. This set of updates therefore formed an infinite loop, and the IMPs spent all their CPU time and buffer space processing it. Because the algorithm judged each update to be more recent than the last, every IMP kept accepting them. The immediate problem was easily fixed by ignoring any updates from IMP 50. What remained to be found was how IMP 50 had managed to get three updates into the network at once.
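The loop can be reproduced with a small sketch. It assumes 6-bit sequence numbers compared around a circle. A number is treated as more recent if it is ahead of the other by at most half the circle (32), or behind it by more than half. This rule is an assumption, chosen because it gives exactly the ordering described above; the precise rule in the IMP software is not reproduced here.

```python
from typing import List

SEQ_SPACE = 64          # 6-bit sequence numbers run from 0 to 63
HALF = SEQ_SPACE // 2   # 32


def is_newer(a: int, b: int) -> bool:
    """Assumed circular comparison: is sequence number a more recent than b?"""
    if a > b:
        return a - b <= HALF   # a is a short way ahead of b
    if a < b:
        return b - a > HALF    # a has wrapped around past 63 back to a small number
    return False


def accepted_updates(cycle: List[int], rounds: int) -> int:
    """Count how many arriving updates an IMP accepts as 'newer' when the same cycle keeps arriving."""
    current = cycle[0]
    accepted = 0
    for i in range(1, rounds):
        candidate = cycle[i % len(cycle)]
        if is_newer(candidate, current):
            current = candidate
            accepted += 1
    return accepted
```

With the cycle [8, 40, 44], each comparison comes out as 'newer': 40 after 8, 44 after 40, and 8 after 44. Every arrival therefore replaces the previous update and the IMP never settles. `accepted_updates([8, 40, 44], 10)` counts all nine arrivals after the first.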

The answer lay in IMP 29, which was dropping bits. The six bits that make up the sequence numbers of the updates reveal the problem:

8 – 001000

40 – 101000

44 – 101100

If the first update was 44, then 40 could easily have been created by an accidental dropped bit (101100 to 101000). In turn, 40 could have been turned into 8 by dropping another bit (101000 to 001000). This would give three updates from the same IMP that together created the infinite loop.
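The bit arithmetic can be checked directly. The sketch below clears single bits, with bit positions numbered from 0 at the right-hand (least significant) end. The helper names are hypothetical.

```python
from typing import Set


def as_6bit(value: int) -> str:
    """Show a sequence number as the 6-bit pattern used above."""
    return format(value, "06b")


def drop_bit(value: int, position: int) -> int:
    """Simulate a dropped bit: clear the bit at `position` (a 1 becomes 0)."""
    return value & ~(1 << position)


def single_drops(value: int, bits: int = 6) -> Set[int]:
    """All values reachable from `value` by dropping exactly one of its 1 bits."""
    return {drop_bit(value, p) for p in range(bits) if (value >> p) & 1}
```

The results match the patterns above:

- `drop_bit(44, 2)` gives 40.
- `drop_bit(40, 5)` gives 8.
- `single_drops(44)` is {12, 36, 40}, so 40 is one of only three values a single dropped bit could produce from 44.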

The Internet RFC 789 Case Study 2 – Network Management

Network managers play a vital part in any network system: they organise and maintain networks so that they remain functional and efficient for all users. They must plan, organise, direct, control and staff the network to maintain speed and efficiency for all users. Once these tasks are completed, the four basic functions of a network manager will be covered: configuration management, performance and fault management, end-user support, and management of the ongoing costs associated with maintaining the network.

Network Managing Tasks

The five key tasks in network management, as described in Networking in the Internet Age by Alan Dennis (2002, p. 351), are:

Planning tasks: forecasting, establishing network objectives, scheduling, budgeting, allocating resources and developing network policies.

Organising tasks: developing organisational structure, delegating, establishing relationships, establishing procedures and integrating the small organisation with the larger organisation.

Directing tasks: initiating activities, decision making, communicating and motivating.

Controlling tasks: establishing performance standards, measuring performance, evaluating performance and correcting performance.

Staffing tasks: interviewing people, selecting people and developing people.

It is vital that all these tasks are carried out, as neglect in one area can cause problems later on. For example, poor organisation could mean an outage lasts twice as long as it should. Similarly, poor decisions about the network topology and communication methods could mean the network is not fast enough for the organisation's needs, even when running at full capacity.

Four Main Functions of a Network Manager

The functions of a network manager can be broken down into four basic functions: configuration management, performance and fault management, end-user support and cost management. Some of the tasks a network manager performs can cover more than one of these functions. Examples include documenting the configuration of hardware and software, performance reports, budgets and user manuals. The five key tasks of a network manager must be carried out in order to cover these basic functions, as this keeps the network working smoothly and efficiently.

Configuration management

Configuration management is managing a network's hardware and software configuration and documentation. It involves keeping the network up to date, and adding and deleting users along with the constraints those users have. It also involves writing documentation for everything from hardware and software to user profiles and application profiles.

Keeping the network up to date involves changing and reconfiguring network hardware, as well as updating software on client machines. Electronic software distribution (ESD) software allows managers to install software on client machines remotely over the network. Because nobody has to physically touch each client computer, this saves a lot of time (Dennis, 2002).

Performance and Fault Management

Performance and fault management are two functions that need to be monitored continually in the network. Performance management is concerned with the optimal settings and setup of the network. It involves monitoring and evaluating network traffic, and then modifying the configuration based on those statistics (Chiu & Sudama, 1992).

Fault management is preventing, detecting and rectifying problems in the network, whether the problem is in the circuits, hardware or software (Dennis, 2002). Fault management is perhaps the most basic function, as users expect a reliable network, whereas slightly better efficiency often goes unnoticed.

Performance and fault management rely heavily on network monitoring, which keeps track of the network circuits and the devices connected to them and ensures they are functioning properly (Fitzgerald & Dennis, 1999).

End User Support

End-user support involves solving any problems that users encounter while using the network. The three main functions of end-user support are resolving network faults, solving user problems and training end users. These problems are usually solved by working through troubleshooting guides set out by the support team (Dennis, 2002).

Cost Management

Costs increase as network services grow; this is a fundamental economic principle (Economics Basics: Demand and Supply, 2006). Organisations are committing more resources to their networks. They need effective and efficient management in place to use those resources wisely and minimise costs.

In cost management, TCO (total cost of ownership) is used to measure how much it costs a company to keep a computer operating. It takes into account the costs of repairs, the support staff who maintain the network, software and software upgrades, and hardware upgrades. In addition, it includes wasted time, for example the cost to a store manager while his staff learn a newly implemented computer system. Including wasted time is widely accepted, although many companies dispute whether it should be. NCO (network cost of ownership) covers everything except wasted time: it examines the direct costs rather than invisible costs such as wasted time.
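The difference between the two measures is simple arithmetic: NCO is the direct costs, and TCO is the direct costs plus wasted time. The sketch below uses yearly figures for a single computer that are invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class YearlyCosts:
    """Hypothetical yearly costs for one computer, in whole dollars."""
    repairs: int
    support_staff: int
    software_and_upgrades: int
    hardware_upgrades: int
    wasted_time: int  # e.g. staff time lost while learning a new system

    def direct(self) -> int:
        """Visible costs: repairs, support staff, software and hardware."""
        return self.repairs + self.support_staff + self.software_and_upgrades + self.hardware_upgrades

    def nco(self) -> int:
        """Network cost of ownership: direct costs only."""
        return self.direct()

    def tco(self) -> int:
        """Total cost of ownership: direct costs plus wasted time."""
        return self.direct() + self.wasted_time
```

As an example, take repairs of 300, support staff 1,200, software 500, hardware upgrades 400 and wasted time 1,600. NCO is then 2,400 and TCO is 4,000, so in this made-up case TCO is two thirds higher than NCO.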

The Internet RFC 789 Case Study 1

The ARPANET (Advanced Research Projects Agency Network) was the beginning of the Internet. It was a network of four computers put together by the U.S. Department of Defense in 1969. It was later succeeded by a faster and more public network called NSFNET (National Science Foundation Network), which grew into the Internet as we know it today. On October 27th, 1980, the ARPANET crashed for several hours. The network appeared to be unstable because high-priority processes were executing to the detriment of the system, exhausting system resources and causing downtime (Rosen, 1981).

With today’s network management tools, the system failure could probably have been avoided. Had these tools been available, network manager responsibilities such as planning, organising, directing, controlling and staffing (Dennis, 2002) would have allowed the situation to be handled correctly. Rosen's case study, RFC 789, identifies two main problems the managers experienced. The first was detecting that a problem existed at all; the second was controlling the problematic software and hardware. Assuming the tools were available, these management responsibilities would have allowed a much quicker and more efficient recovery. Moreover, if careful planning and organising had been carried out when the system was implemented, the crash might have been avoided completely.

Mobile Computing: Personal perspective

People choose to buy and use mobile computing devices for numerous reasons. These reasons are often much simpler than business reasons, because they mainly come down to personal preference. Some people want to keep in touch with others constantly, or to surf the internet for information while out. Others want to keep up to date with schedules and organise their lives. Some want a WLAN at home so they can easily sit with their laptop at the dining table or in front of the TV, and there are many more reasons besides. People wish to make their lives easier and more convenient, and these wishes have created devices such as organisers, video camera phones and car kits.

How VPN services work

A Virtual Private Network (VPN) is a technology that creates a secure tunnel through the internet. Each node on the VPN has a VPN device, a specially designed router or switch, and these devices create an invisible tunnel through the internet. The data is split up into packets or frames, and the VPN device at the sender's end encapsulates it in a VPN frame. This tells the receiving VPN device how to process it (for example, how to put the split data back together). This encapsulation is quite complex because the data must travel securely through an unsecured network such as the public internet.

VPN services start with a user connecting a VPN device to an ISP via a modem. Next, the user's computer generates a piece of data, such as a web request message in the HTTP protocol. This data then passes through the transport and network layers, which add TCP and IP wrappers. A data link layer protocol is then added; for a dial-up connection this is typically the Point-to-Point Protocol (PPP). At this point, in a normal non-VPN environment, the web request would be ready for transmission. Instead, the VPN device encrypts the frame and encapsulates it with a VPN protocol such as the Layer 2 Tunneling Protocol (L2TP). The VPN device then places another IP packet around it, so that it can travel through the internet and find the required VPN device at the other end. The result is an outer IP packet containing an L2TP frame. That frame in turn contains the PPP frame, the inner IP packet, the TCP segment and finally the HTTP request, and it is now ready for secure transmission through the internet. As the packets reach their destination, the process is reversed, and each protocol is stripped off as the packet passes through the different devices.
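The nesting order described above can be sketched as wrappers inside wrappers. This is a simplified model with two assumptions. First, it performs no real encryption; in a real VPN the inner frame would be unreadable in transit. Second, it omits the UDP header that L2TP actually travels in, following the simplified stack given in the text. The `Layer` class and `wrap` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Layer:
    """One protocol wrapper; its payload is either the original data or the next inner wrapper."""
    protocol: str
    payload: Union["Layer", str]


def wrap(payload: Union[Layer, str], protocols: List[str]) -> Union[Layer, str]:
    """Apply protocols innermost-first, so the last one listed ends up outermost."""
    for name in protocols:
        payload = Layer(name, payload)
    return payload


def outermost_first(packet: Union[Layer, str]) -> List[str]:
    """List protocol names from the outside in, the order a receiving device strips them."""
    names = []
    while isinstance(packet, Layer):
        names.append(packet.protocol)
        packet = packet.payload
    return names


# An ordinary dial-up frame, then the same frame tunnelled by the VPN device.
ordinary = wrap("GET /index.html", ["HTTP", "TCP", "IP", "PPP"])
tunnelled = wrap(ordinary, ["L2TP", "outer IP"])
```

`outermost_first(tunnelled)` lists outer IP, L2TP, PPP, IP, TCP and HTTP, which is the order in which the destination strips them off.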

There are three types of VPN: intranet, extranet and access. An intranet VPN provides virtual circuits between an organisation's offices, or between departments in neighbouring buildings. For example, the ECU computer labs use a physical intranet connected by CAT 5 cable, whereas a virtual intranet uses the internet to connect its sites. An extranet VPN is the same as an intranet VPN, except that it connects computers belonging to different organisations. An access VPN enables employees to access an organisation's network from a remote location as though they were inside the building.

Reference:

Carr, H. H., & Snyder, C. A. (2007). Data Communications & Network Security. United States of America: McGraw-Hill/Irwin, pp. 124-129.

Dennis, A. (2002). Networking In The Internet Age Application Architectures. United States of America: John Wiley and Sons, Inc.

Dostálek, L., & Kabelová, A. (2006). Understanding TCP/IP. Retrieved August 6, 2006, from http://www.windowsnetworking.com/articles_tutorials/Understanding-TCPIP-Chapter1-Introduction-Network-Protocols.html

Transmitting a message between computers through the OSI layers

The Open Systems Interconnection (OSI) model is a network model: a framework of standards containing three groups with a total of seven layers. It provides a set way for computers and devices to ‘talk’ to each other, as a means of avoiding compatibility issues. During the 1970s, computers started to communicate with each other without regulation. It was not until the late 1970s that the speed of this transmission started to increase and standards were created. The seven layers consist of a group of three application layers, two internetwork layers and two hardware layers.

When a message is transmitted from one computer to another through these seven layers, protocols are wrapped around the data. Each layer uses a formal language, or protocol, which is a set of instructions describing what the layer will do to the message. These protocols are encapsulated around the data. You could think of the protocols as layers of paper wrapped around a message, each of which only the corresponding layer understands. Each layer handles a different aspect of the connection, as discussed below.

The first layer the message passes through is the application layer. It controls what data is submitted. It also deals with communication issues such as establishing authority, identifying communication partners and setting the level of privacy. It is not the interface the user sees; the client program creates that. When a user clicks a web link, the software on the computer that understands HTTP (such as Internet Explorer and Netscape Communicator) turns the click into an HTTP request message.

The presentation layer may perform encryption and decryption of data, data compression and translation between different data formats. This layer is also concerned with formatting output for display and with editing user input. Many requests, such as website requests, do not use the presentation layer. There is usually no software installed at this layer, so it is rarely used.

The session layer, as the name suggests, deals with the organisation of the session. It creates the connection between the applications and enforces the rules for carrying out the session. If the session fails, it tries to re-establish the connection. When computers communicate they need to be synchronised. If either party fails to send information, the session layer provides a synchronisation point from which communication can continue.

The transport layer ensures that a reliable channel exists between the communicating computers. It breaks the message into smaller, easy-to-handle packets ready for transmission by the lower layers. It also translates the address into a numeric address for easier handling at the lower levels. The transport layer protocols must be the same on all communicating computers. It is at this layer that protocols such as the Transmission Control Protocol (TCP) are used. TCP allows computers running different applications and environments to communicate effectively.
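Breaking a message into smaller packets, and putting it back together even when the pieces arrive out of order, can be sketched as follows. This is a simplification. Real TCP numbers bytes rather than whole pieces, and it also handles acknowledgements and retransmission, none of which is modelled here.

```python
from typing import List, Tuple

Piece = Tuple[int, bytes]  # (sequence number, data)


def segment(message: bytes, size: int) -> List[Piece]:
    """Split a message into numbered pieces of at most `size` bytes."""
    return [(seq, message[start:start + size])
            for seq, start in enumerate(range(0, len(message), size))]


def reassemble(pieces: List[Piece]) -> bytes:
    """Rebuild the message from pieces in any arrival order, using their sequence numbers."""
    return b"".join(data for _, data in sorted(pieces))
```

For example, splitting the 24-byte request `b"GET /index.html HTTP/1.1"` into 5-byte pieces gives five pieces. Reassembling them in reverse arrival order still gives back the original request.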

The website request has now been encapsulated in two different protocols, HTTP and TCP, and is almost ready to move around the network. A network contains many nodes, so the network layer routes the data from node to node and avoids any computer that is not passing packets on. Any computer connected to the Internet must understand TCP/IP, as it is the internetwork layers that enable the computer to find other computers and deliver messages to them.

The IP packet, containing the TCP and HTTP protocols nested inside one another, is now ready for the data link layer. The data link layer manages the physical transmission performed by the next layer. It decides when to transmit messages over the devices and cabling, adds start and stop markers to the message, and detects and deals with errors that occur during transmission. This is necessary because the physical layer sends data without understanding its meaning. An Ethernet frame is wrapped around the message, which is then passed on to the physical layer.
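The data link layer's job of marking the start and end of a frame and detecting transmission errors can be sketched with a CRC-32 check. This is the same kind of check Ethernet uses in its frame trailer. The frame layout here is a simplified assumption rather than the real Ethernet format: a one-byte marker, the payload, a four-byte CRC and another marker. The sketch also does no byte stuffing.

```python
import struct
import zlib
from typing import Optional

MARKER = b"\x7e"  # hypothetical start/stop marker for this sketch


def make_frame(payload: bytes) -> bytes:
    """Add start and stop markers and a 4-byte CRC-32 computed over the payload."""
    return MARKER + payload + struct.pack(">I", zlib.crc32(payload)) + MARKER


def check_frame(frame: bytes) -> Optional[bytes]:
    """Return the payload if the frame is intact, or None if it was damaged in transit."""
    if len(frame) < 6 or frame[:1] != MARKER or frame[-1:] != MARKER:
        return None
    payload, trailer = frame[1:-5], frame[-5:-1]
    (expected,) = struct.unpack(">I", trailer)
    return payload if zlib.crc32(payload) == expected else None
```

Flipping any single bit of the payload makes `check_frame` return None, because CRC-32 is guaranteed to catch every single-bit error.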

In the physical layer, the Ethernet frame (and the other protocols inside it) is converted into a digital signal consisting of a series of ones and zeros (binary). The website request message is then sent through the cabling to the web server. When the server receives the request, the whole process is reversed. The Ethernet frame is “unpacked” back up through each layer until it reaches the application layer and the message is read. The process then starts again as the requested web page is sent back in another message to the person who requested it.
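Headers are added on the way down and removed in reverse order on the way up. This symmetry can be sketched with a simple list. The sketch assumes that each of the six upper layers adds one labelled header and that the physical layer only transmits bits. Real protocols also add trailers (such as the Ethernet frame check), and not every layer is used for every message.

```python
from typing import List

# Layers a message passes down through before the physical layer transmits it.
LAYERS = ["application", "presentation", "session", "transport", "network", "data link"]


def send(message: str) -> List[str]:
    """Encapsulate: each layer puts its header in front of everything added so far."""
    unit = [message]
    for layer in LAYERS:
        unit = [f"[{layer} header]"] + unit
    return unit


def receive(unit: List[str]) -> str:
    """Decapsulate: strip headers in reverse order, checking each is the one expected."""
    remaining = list(unit)
    for layer in reversed(LAYERS):
        header = remaining.pop(0)
        if header != f"[{layer} header]":
            raise ValueError(f"expected {layer} header, got {header!r}")
    (message,) = remaining
    return message
```

The data link header ends up outermost, so the receiving computer meets it first and the application header last.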

Reference:

Carr, H. H., & Snyder, C. A. (2007). Data Communications & Network Security. United States of America: McGraw-Hill/Irwin, pp. 124-129.

Dennis, A. (2002). Networking In The Internet Age Application Architectures. United States of America: John Wiley and Sons, Inc.

Dostálek, L., & Kabelová, A. (2006). Understanding TCP/IP. Retrieved August 6, 2006, from http://www.windowsnetworking.com/articles_tutorials/Understanding-TCPIP-Chapter1-Introduction-Network-Protocols.html

A repeater, bridge, router and gateway

The repeater, bridge, router and gateway are all pieces of network equipment that work at various levels of the OSI model, performing different tasks. The repeater operates at the physical layer of the OSI model and is the cheapest of these devices. A repeater can be thought of as a line extender, since signals on media such as 10BaseT and 100BaseT become weak beyond about 100 metres. In an analog environment, the repeater receives a signal and replicates it to form a signal that matches the old one. In a digital environment, it receives the signal and regenerates it. Using a repeater in a digital network can create a strong connection between the two segments it joins, since any distortion or attenuation is removed. Unlike routers, repeaters are restricted to linking segments of the same network type, for example a token ring segment to another token ring segment. A multiport repeater (hub) regenerates whatever arrives on one port and sends it out of all other ports. It extends the network without calculating any best path for forwarding packets. This means that only one device on the shared network can transmit at a time.

A bridge is an older way of connecting two local area networks, or two segments of the same network, at the data link layer. A bridge is more powerful than a repeater, as it operates at the second layer (data link) of the OSI model. At first, messages are sent out to every address on the network and received by all nodes. The bridge learns which addresses are on which network and develops a forwarding table, so that subsequent messages can be forwarded only to the right network. There are two types of bridge: transparent bridges and translating bridges. A translating bridge connects two LANs that use different data link protocols. It does this by translating the data into the appropriate protocol, for example from a token ring network to an Ethernet network. A transparent bridge performs the same functions as a translating bridge but only connects two LANs that use the same data link protocol.
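The learning process described above can be sketched as a small forwarding-table class that follows the behaviour of a transparent learning bridge. It records which port each sender was seen on and forwards to a known port. When the destination is still unknown, it floods the frame to all other ports. Port names and addresses are hypothetical.

```python
from typing import Dict, List


class LearningBridge:
    """Minimal learning-bridge sketch with a forwarding table of address -> port."""

    def __init__(self, ports: List[str]) -> None:
        self.ports = ports
        self.table: Dict[str, str] = {}

    def handle(self, src: str, dst: str, in_port: str) -> List[str]:
        """Learn the sender's port, then return the ports this frame is forwarded to."""
        self.table[src] = in_port
        out_port = self.table.get(dst)
        if out_port is None:
            # Destination not learned yet: flood to every other port.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            # Destination is on the same segment as the sender: filter the frame.
            return []
        return [out_port]
```

The first frame to an unknown address is flooded. Once that address has sent a frame of its own, traffic to it goes out of one port only.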

Routers are used in the majority of home networks today and are placed at the gateways of networks. They are used to connect two LANs together (such as two departments' networks) or to connect a LAN to an internet service provider (ISP). Like a bridge, a router uses headers and forwarding tables to decide where to send packets, but routers are more complex than bridges. They use routing protocols such as RIP or OSPF to exchange routing information with each other and calculate the best route between two nodes. They use the Internet Control Message Protocol (ICMP) to report errors. A router also ignores frames that are not addressed to it. A router operates at the third OSI layer (network layer), and its routing can be static or dynamic. Once a static routing table is constructed, its paths do not change. If a link or connection is lost, the router will issue an alarm, but unlike with dynamic routing it cannot change the path of traffic automatically. Routers are slower than bridges, but they are more powerful. They can split packets up and reassemble them even when the pieces arrive out of order, and they can choose the best possible route for transmission. These extra features make routers more expensive than bridges.
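Choosing the best path can be sketched with Dijkstra's shortest-path algorithm, which link-state routing protocols such as OSPF use. The sketch also shows the difference between static and dynamic routing. A route worked out once keeps pointing at a failed link, while recomputing after the failure finds the alternative. The four-router network and its link costs are invented for illustration.

```python
import heapq
from typing import Dict, List

Graph = Dict[str, Dict[str, int]]  # router -> {neighbour: link cost}


def best_path(links: Graph, src: str, dst: str) -> List[str]:
    """Dijkstra's algorithm: lowest-cost path from src to dst, or [] if dst is unreachable."""
    dist = {src: 0}
    prev: Dict[str, str] = {}
    heap = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            break
        if d > dist[node]:
            continue  # stale queue entry; a cheaper path was already found
        for nbr, cost in links.get(node, {}).items():
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if dst not in dist:
        return []
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


def remove_link(links: Graph, a: str, b: str) -> None:
    """Simulate a failed connection between routers a and b (links are two-way)."""
    links[a].pop(b, None)
    links[b].pop(a, None)


NETWORK: Graph = {
    "R1": {"R2": 1, "R3": 2},
    "R2": {"R1": 1, "R4": 1},
    "R3": {"R1": 2, "R4": 2},
    "R4": {"R2": 1, "R3": 2},
}
```

`best_path(NETWORK, "R1", "R4")` gives R1, R2, R4. A static route would keep sending R4's traffic to R2 after the R2-R4 link fails. Calling `remove_link(NETWORK, "R2", "R4")` and recomputing, as a dynamic router would, gives R1, R3, R4.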

Gateways connect networks with different architectures by performing protocol conversion at the application level. The gateway is the most complex of these devices, operating at all seven layers of the OSI model. Gateways are used to connect LANs to mainframes, or to connect a LAN to a wide area network (WAN). Gateways can provide the following services:

Connecting networks that use different protocols.

Terminal emulation, so that workstations can emulate dumb terminals (which leave all the processing to a host machine).

Error detection on transmitted data and monitoring of traffic flow.

File sharing and peer-to-peer communication between the LAN and the host.

Reference:

Carr, H. H., & Snyder, C. A. (2007). Data Communications & Network Security. United States of America: McGraw-Hill/Irwin, pp. 124-129.

Dennis, A. (2002). Networking In The Internet Age Application Architectures. United States of America: John Wiley and Sons, Inc.

Dostálek, L., & Kabelová, A. (2006). Understanding TCP/IP. Retrieved August 6, 2006, from http://www.windowsnetworking.com/articles_tutorials/Understanding-TCPIP-Chapter1-Introduction-Network-Protocols.html

Client-Server Architectures: Technical Differences

Client-server architectures are one of the three fundamental application architectures and are the most common architecture on the internet. The work done by any program can be divided into four general functions:

Presentation logic is the way the computer presents information to the user and accepts the user's input.

Application logic is the actual work performed by the application.

Data access logic is the processing required to access data, for example queries and search functions.

Data storage logic covers storing and retrieving the information that applications need.

Client-server architectures split these functions so that the work is divided between servers and clients (Dennis, 2002).

The simplest client-server architecture is the two-tier architecture. Two-tier architectures have only clients and servers, and they split the application functions in half. Presentation logic and application logic are kept on the client computer, while the server manages data access logic and data storage. Client-server architectures enable software and hardware from different vendors to be used together, and this is both an advantage and a disadvantage. It is a disadvantage because it is sometimes difficult to get software from different vendors to work together. The problem can be resolved using middleware, which is software that sits between the application on the client and the server. Middleware provides a standard way of communicating, so that software from different vendors can be used together. It also makes changes easier when the network layout changes or is updated (for example, when a new server is added), because the middleware tells the client computer where the data is kept on the server.
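The role of middleware described above can be sketched in a few lines. The client asks for data by name, and the middleware knows which server holds it. The class, method names and in-memory 'servers' are hypothetical stand-ins; real middleware communicates over the network and is far more involved.

```python
from typing import Dict, List


class Middleware:
    """Hypothetical middleware: clients ask for data by name and never hard-code server locations."""

    def __init__(self) -> None:
        self.locations: Dict[str, str] = {}                  # dataset name -> server name
        self.servers: Dict[str, Dict[str, List[str]]] = {}   # server name -> its datasets

    def register(self, server: str, dataset: str, rows: List[str]) -> None:
        """Record that `server` now holds `dataset`."""
        self.servers.setdefault(server, {})[dataset] = rows
        self.locations[dataset] = server

    def query(self, dataset: str) -> List[str]:
        """Look up where the dataset lives and fetch it on the client's behalf."""
        server = self.locations[dataset]
        return self.servers[server][dataset]

    def move(self, dataset: str, new_server: str) -> None:
        """Relocate a dataset (e.g. onto a newly added server); clients need no changes."""
        old = self.locations[dataset]
        rows = self.servers[old].pop(dataset)
        self.register(new_server, dataset, rows)
```

After `move("customers", "db2")`, a client calling `query("customers")` gets the same rows as before without knowing that a new server was added.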

Three-tier architectures use three sets of computers. Typically, presentation logic is on the client, application logic is on an application server, and data access and data storage logic are together on a database server. An n-tier architecture uses more than three sets of computers and usually spreads the logic across separate computers, sometimes splitting application logic across two or more servers. The primary advantage of an n-tier client-server architecture is that it separates out the processing. This balances the load across the different servers and makes the system more scalable. An n-tier architecture has more processing power than a two-tier architecture, as there are more servers. If more capacity is required, we can simply add a server instead of replacing a whole machine. N-tier and three-tier architectures do have disadvantages. They place a greater load on the network, because there are more communication lines and nodes. This generates a lot of network traffic, so a higher-capacity network is required. N-tier networks are also much more complex, and it is more difficult to program and test software for them, again because of the number of communication lines.
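The separation of logic in a three-tier architecture can be sketched with three classes, one per tier. Here the tiers are ordinary objects in one program. In a real deployment each call between tiers would be a network request, which is where the extra traffic mentioned above comes from. The order-totalling example is hypothetical.

```python
from typing import Dict, List


class DataTier:
    """Data access and data storage logic, e.g. on a database server."""

    def __init__(self) -> None:
        self._orders: Dict[int, int] = {}  # order id -> amount in dollars

    def save(self, order_id: int, amount: int) -> None:
        self._orders[order_id] = amount

    def amounts(self) -> List[int]:
        return list(self._orders.values())


class ApplicationTier:
    """Application logic, e.g. on an application server: validates and totals orders."""

    def __init__(self, data: DataTier) -> None:
        self.data = data

    def place_order(self, order_id: int, amount: int) -> None:
        if amount <= 0:
            raise ValueError("order amount must be positive")
        self.data.save(order_id, amount)

    def total(self) -> int:
        return sum(self.data.amounts())


class PresentationTier:
    """Presentation logic on the client: formats results for the user."""

    def __init__(self, app: ApplicationTier) -> None:
        self.app = app

    def show_total(self) -> str:
        return f"Total orders: ${self.app.total()}"
```

Each tier only talks to the one next to it. The data tier could therefore be moved to a larger server, or the application tier split across several servers, without changing the presentation code.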

Reference:

Carr, H. H., & Snyder, C. A. (2007). Data Communications & Network Security. United States of America: McGraw-Hill/Irwin, pp. 124-129.

Dennis, A. (2002). Networking In The Internet Age Application Architectures. United States of America: John Wiley and Sons, Inc.

Dostálek, L., & Kabelová, A. (2006). Understanding TCP/IP. Retrieved August 6, 2006, from http://www.windowsnetworking.com/articles_tutorials/Understanding-TCPIP-Chapter1-Introduction-Network-Protocols.html